Data House Guide

Introduction

DFINERY Data House is a feature that lets you upload data you have already collected or own to the DFINERY console and combine it with the data collected by the DFINERY SDK. This lets you manage your customers' data in the DFINERY console and review it in reports.

Data types

There are two main types of data that can be uploaded to Data House.

Event Log Table

An Event Log Table holds time-series data extracted from the customer's internal DB. This data is integrated into the CDP service, managed systematically over time, and used for purposes such as analysis, visualization, and personalization.

Example

- Event logs generated by the customer's app
- Attribution logs collected by the customer's app itself or by a third party

Meta Table

A Meta Table contains metadata such as unique IDs, names, and owners extracted from the customer's internal DB. It is used to manage the key information that identifies data and to maintain data quality and accuracy. Metadata built this way is combined with event log data in the CDP service and used for various business purposes.

Example

- Table containing information such as age/gender/address/VIP status based on user ID

Create Event Log Table

1. Basic settings

Enter a name and a brief description for the data table, and set its type. The data storage type must be set to “Event Log Data”.

2. Event log data primary key settings

Set the primary keys to use when uploading data to the Event Log Table.

Time Key

Sets the time field of the event log data.

※ This is a required key and null values are not allowed.

| Setting | Definition |
| --- | --- |
| Key Name | Sets the name of the Time Key. |
| Type | Sets the data type. |
| Display Name | Sets the name displayed in the DFINERY console. |
| Description | Enter a description for the key. |

Deduplication Key

Sets the key used to validate data. Duplicate rows are removed based on the value of this key. For example, when you modify a previously added row and upload it again, only existing data with the same Deduplication Key value and the same date (UTC) is updated.

※ This is a required key and null values are not allowed.

| Setting | Definition |
| --- | --- |
| Key Name | Sets the name of the Deduplication Key. |
| Type | Sets the data type. |
| Display Name | Sets the name displayed in the DFINERY console. |
| Description | Enter a description for the key. |
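To make the Deduplication Key rule more concrete, here is a minimal Python sketch of one plausible reading of it: two rows are duplicates when they share the same Deduplication Key value and the same UTC date of their Time Key, and the row uploaded later wins. The column names `event_time` and `event_id`, and the "last row wins" behavior, are assumptions for illustration; this is not DFINERY's implementation.

```python
from datetime import datetime, timezone

def dedupe_event_rows(rows, time_key="event_time", dedup_key="event_id"):
    """Keep one row per (UTC date of the Time Key, Deduplication Key value).

    Hypothetical sketch: the key names and the 'last uploaded row wins' rule
    are assumptions made for illustration.
    """
    latest = {}
    for row in rows:
        # Both keys are required and may not be null.
        if row.get(time_key) is None or row.get(dedup_key) is None:
            raise ValueError(f"required key missing or null: {row}")
        ts = datetime.fromisoformat(row[time_key])
        # Normalize to UTC; naive timestamps are assumed to already be UTC.
        ts = ts.replace(tzinfo=timezone.utc) if ts.tzinfo is None else ts.astimezone(timezone.utc)
        # A later row with the same (UTC date, key) replaces the earlier one.
        latest[(ts.date(), row[dedup_key])] = row
    return list(latest.values())

rows = [
    {"event_time": "2024-05-01T10:00:00+00:00", "event_id": "a1", "amount": 100},
    {"event_time": "2024-05-01T23:30:00+00:00", "event_id": "a1", "amount": 120},  # same UTC date: replaces the row above
    {"event_time": "2024-05-02T09:00:00+09:00", "event_id": "a1", "amount": 90},   # 2024-05-02 00:00 UTC: new date, kept
]
result = dedupe_event_rows(rows)
```

Note how the time zone matters: the third row is 09:00 in UTC+9, which is already the next day in UTC, so it is not treated as a duplicate of the first two.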

3. Data design

In addition to the Time Key and Deduplication Key, define the data columns to be uploaded. Each column is either fixed or semi-fixed depending on its item type, and up to 1,000 columns can be defined.

Item Type

The item type determines which incoming data is saved to a column.

- Fixed: When the column is added, only data of the data type set for that column is saved.
- Semi-fixed: Data is saved when its column name starts with a specified prefix and its data type matches.
  - Prefix rule: column name prefix + data type
  - Example: the column name is set to category_string
  - Result: all data entered with column names such as category_1:string / category_2:string / category_3:string is saved.
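The semi-fixed prefix rule can be illustrated with a small Python sketch. It assumes, as the example above suggests, that a semi-fixed column defined as `category_string` stands for the prefix `category` plus the data type `string`, and that an incoming `name:type` column matches when its name starts with that prefix and its type is equal. The helper names and the `name:type` notation are hypothetical.

```python
from __future__ import annotations

def parse_semi_fixed(definition: str) -> tuple[str, str]:
    """Split a hypothetical semi-fixed definition like 'category_string' into (prefix, type)."""
    prefix, _, data_type = definition.rpartition("_")
    if not prefix or not data_type:
        raise ValueError(f"expected '<prefix>_<type>', got {definition!r}")
    return prefix, data_type

def matches_semi_fixed(definition: str, incoming: str) -> bool:
    """Return True if an incoming 'name:type' column would be saved under the definition."""
    prefix, data_type = parse_semi_fixed(definition)
    name, _, incoming_type = incoming.partition(":")
    # Name must start with the prefix AND the data type must match.
    return name.startswith(prefix) and incoming_type == data_type

incoming = ["category_1:string", "category_2:string", "category_3:string",
            "category_4:int", "price:string"]
saved = [col for col in incoming if matches_semi_fixed("category_string", col)]
```

Here `category_4:int` is rejected because its type differs, and `price:string` because its name does not start with the prefix.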

Add column

Set the data of the column to be added.

| Setting | Definition |
| --- | --- |
| Key Name | Sets the key name of the data column. |
| Identifier settings (optional) | Lets you use the column as an identifier so that it can be used for audience segments. |
| Type | Sets the data type. Five column data types are supported. |
| Display Name | Sets the name displayed in the DFINERY console. |
| Description | Enter a description for the key. |
| Required value | Sets whether a value is required. |

Create Meta Table

1. Basic settings

Enter a name and a brief description for the data table, and set its type. The data storage type must be set to “Metadata”.

2. Metadata primary key settings

Set the primary keys to use when uploading data to the Meta Table.

Unique Key

Sets the primary key for data uploaded to the Meta Table. Duplicate data is removed based on the value of this key.

※ This is a required key and null values are not allowed.

| Setting | Definition |
| --- | --- |
| Key Name | Sets the key name of the Unique Key. |
| Identifier settings (optional) | Lets you use the column as an identifier so that it can be used for audience segments. |
| Type | Sets the data type. |
| Display Name | Sets the name displayed in the DFINERY console. |
| Description | Enter a description for the key. |

Version Key

When data arrives with a Unique Key value that already exists, the row with the larger Version Key value is kept.

※ This is a required key, and null values are not allowed.

| Setting | Definition |
| --- | --- |
| Key Name | Sets the key name of the Version Key column. |
| Type | Sets the data type. |
| Display Name | Sets the name displayed in the DFINERY console. |
| Description | Enter a description for the key. |
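The Unique Key / Version Key rule behaves like a versioned upsert. The Python sketch below models it with hypothetical column names `user_id` (Unique Key) and `version` (Version Key). It also assumes that a row whose Version Key is equal to the stored one does not replace it; the guide does not say how ties are handled.

```python
def upsert_by_version(table, rows, unique_key="user_id", version_key="version"):
    """Merge rows into `table` (a dict keyed by Unique Key), keeping the larger Version Key.

    Hypothetical sketch: column names and tie handling are assumptions.
    """
    for row in rows:
        uid, ver = row.get(unique_key), row.get(version_key)
        # Both keys are required and may not be null.
        if uid is None or ver is None:
            raise ValueError(f"Unique Key and Version Key are required: {row}")
        current = table.get(uid)
        # Replace only when the incoming version is strictly larger.
        if current is None or ver > current[version_key]:
            table[uid] = row
    return table

meta = {}
upsert_by_version(meta, [
    {"user_id": "u1", "version": 1, "vip": False},
    {"user_id": "u1", "version": 3, "vip": True},
    {"user_id": "u1", "version": 2, "vip": False},  # older version: ignored
    {"user_id": "u2", "version": 1, "vip": False},
])
```

Because the comparison uses the Version Key rather than upload order, the late-arriving version 2 row does not overwrite version 3. A timestamp or monotonically increasing counter is a natural choice for the Version Key.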

3. Data design

In addition to the Unique Key and Version Key, define the data columns to be uploaded. Up to 1,000 columns can be defined.

Add column

Set the data of the column to be added.

| Setting | Definition |
| --- | --- |
| Key Name | Sets the key name of the data column. |
| Identifier settings (optional) | Lets you use the column as an identifier so that it can be used for audience segments. |
| Type | Sets the data type. Five column data types are supported. |
| Display Name | Sets the name displayed in the DFINERY console. |
| Description | Enter a description for the key. |
| Required value | Sets whether a value is required. |

Data Pipeline

Once the data table setup is complete, upload the table's data.

Data upload

There are two ways to upload data:

SFTP

Upload data using a file transfer client such as FileZilla. Only CSV files are supported. Before uploading, create a folder whose name matches the folder name specified in the DFINERY console, and upload the data file into that folder.

File upload

Upload files directly. Only CSV files are supported.

Data processing

Error message types

| Error message | Description | Solution |
| --- | --- | --- |
| No Data | Empty file | The file is empty. Please check the contents of the file. |
| File {file name} is not found or duplicated. | File not found | Please upload the file again. |
| Required Field Missing: {string.join(",", missing primary key)} | Primary key missing from the header | Add the missing primary key columns to the CSV header. |
| CSV Error: Row is too long - Record(line {line number}): {1,000 characters of the contents of the row} | Row length limit exceeded | Keep each row under 10 million characters and make sure it is in valid CSV format. Please edit that line. |
| CSV Error: Failed to validate schema( primary key [{missing primary key}] is required) | Primary key value missing from a row | Fill in the primary key values in the CSV row. |
| CSV Error: The num of fields is less than the number of header's fields, Record(line {line number}) : {content of that row} | Row has fewer columns than the header | The number of columns in the CSV header and the row do not match. Please edit that line. |
| CSV Error: Empty required field(Col: {Missing column name}, Expected Type: {Data type}, Format: {Format if date format}) - Record(line {Line number}): {Contents of the corresponding row} | Required column value missing | A required column value is missing. Please edit that line. |
| CSV Error: Failed to parse(Col: {Missing column name}, Expected Type: {Data type}, Format: {Format if date format}, Actual Value: {Value included in actual row}) - Record(line {Line number}): {Contents of the row} | Data parsing error | The data type does not match. Please edit that line. |
| CSV Error : {Exception message} - Record(line {line number}) : {content of the corresponding line} | Other CSV parsing failures | The error was caused by another issue. Please edit that line. |
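Several of the errors above can be caught before uploading. The following Python sketch uses the standard `csv` module to check a file for a subset of them: primary keys missing from the header, rows with fewer fields than the header, and empty primary-key or required values. The function name, its parameters, and the messages it returns are hypothetical and only loosely modeled on the table; DFINERY's own validation may differ.

```python
import csv
import io

def precheck_csv(text, primary_keys, required=()):
    """Return a list of problems found in CSV text; an empty list means none were found.

    Hypothetical pre-upload check covering a subset of the documented errors.
    """
    reader = csv.reader(io.StringIO(text))
    header = next(reader, None)
    if header is None:
        return ["No Data: the file is empty"]
    missing = [key for key in primary_keys if key not in header]
    if missing:
        return ["Header is missing primary key(s): " + ", ".join(missing)]
    must_have = set(primary_keys) | set(required)
    problems = []
    for row in reader:
        line_no = reader.line_num  # physical line where this record ends
        if len(row) < len(header):
            problems.append(f"line {line_no}: fewer fields than the header")
            continue
        # Fields beyond the header are ignored, as described under the special cases.
        values = dict(zip(header, row))
        for col in header:
            if col in must_have and values[col] == "":
                problems.append(f"line {line_no}: empty value in '{col}'")
    return problems

sample = (
    "event_time,event_id,amount\n"
    "2024-05-01T10:00:00+00:00,a1,100\n"
    "2024-05-01T11:00:00+00:00,,50\n"
    "2024-05-01T12:00:00+00:00,a3\n"
)
issues = precheck_csv(sample, primary_keys=["event_time", "event_id"])
```

Here line 3 is flagged for an empty `event_id` and line 4 for having only two of the three header fields.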

Special cases

1. When a row has more columns than the header

Name,Age

John,30,male

In the example above, the value male is ignored because the row has more columns than the header.

2. Escaping double quotes (two consecutive double quotes inside a quoted field)

Name,Note

John,"He said, ""I am happy."""

To include a double quotation mark (") inside a field enclosed in double quotation marks, use two consecutive double quotation marks ("").

Formats that cannot be processed

1. Mismatched double quotation marks ("), with or without a line break

Name,Note

John," he said, "I am happy."

In the example above, the double quotation marks in the Note field are mismatched, so this format cannot be processed.

2. Escaped double quotes in a field that is not fully enclosed in double quotes (")

Name,Note

John,"He said,""I am happy.""

A field that contains escaped double quotes ("") must be enclosed entirely in double quotation marks.

3. Escaping double quotes with a backslash

Name,Note

John,"He said, \"I am happy.\""

CSV does not support escaping with a backslash (\), so this format cannot be processed.
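If you generate upload files programmatically, a standard CSV library can apply these quoting rules for you. For example, Python's built-in `csv` module by default doubles embedded quotes and wraps such fields in quotes (`doublequote=True`, `QUOTE_MINIMAL`), so it produces the accepted `""` form rather than the backslash form. The helper name `to_csv` is hypothetical.

```python
import csv
import io

def to_csv(header, rows):
    """Serialize rows to CSV text using standard double-quote escaping."""
    buf = io.StringIO()
    # Defaults: doublequote=True and QUOTE_MINIMAL, so quotes become "" inside a quoted field.
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(header)
    writer.writerows(rows)
    return buf.getvalue()

text = to_csv(["Name", "Note"], [["John", 'He said, "I am happy."']])
print(text)
```

This prints the same row as the escaping example above, `John,"He said, ""I am happy."""`. When writing directly to a file instead, open it with `newline=""` as the `csv` module documentation recommends.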

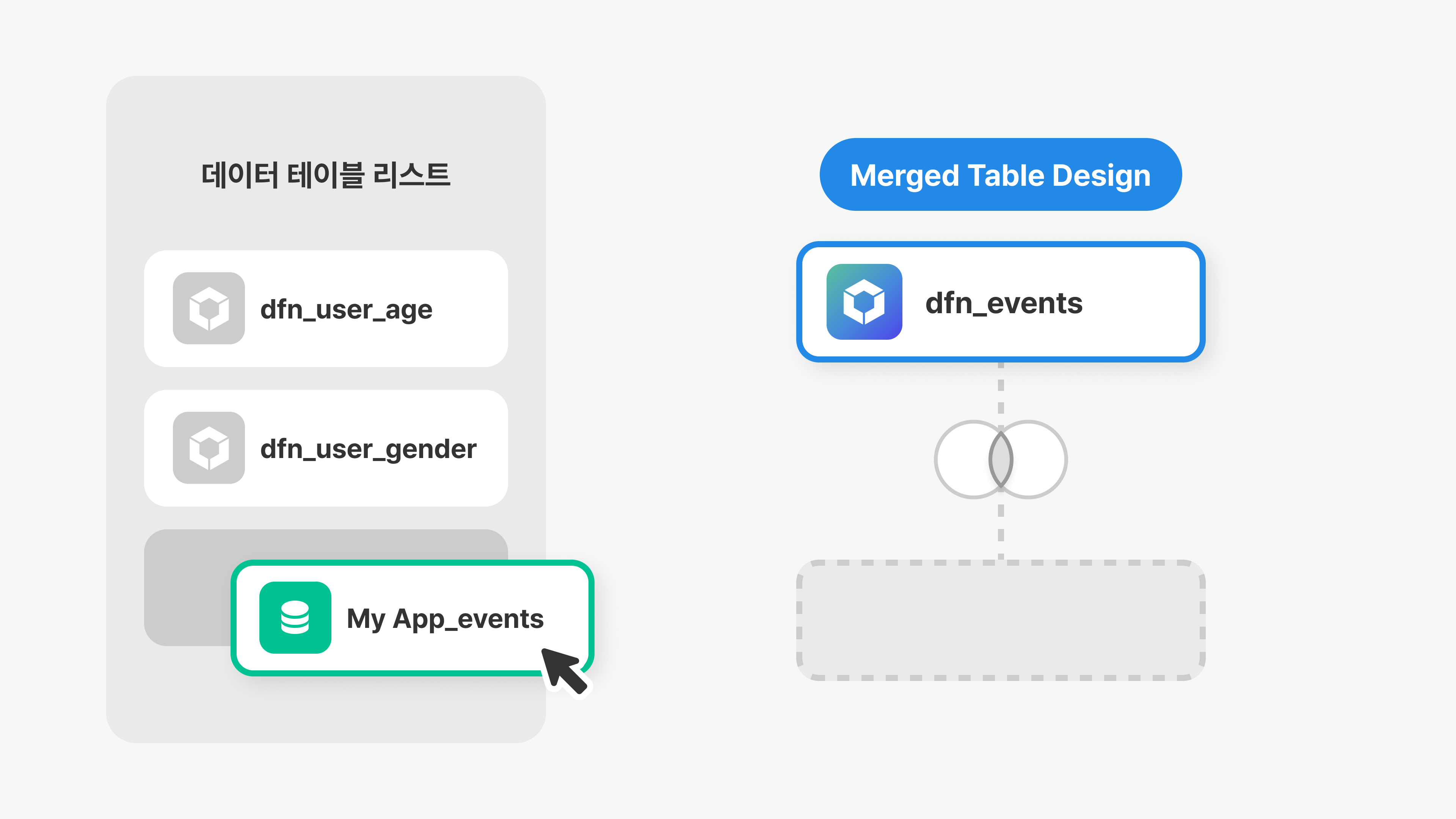

Merged Table

Uploaded data can be merged using the Merged Table function.

Table merging proceeds as follows.

1. Drag a created Event Log Table or Meta Table to add it to Merged Table Design.

2. Click the ❗ (exclamation mark) icon in the middle, as shown in the screenshot below.

3. Set the merge criteria.

The available Join Types are:

- Left Outer: Keeps every row of the left table, even rows that do not meet the join condition.
- Full Outer: Keeps every row of both the left and right tables, whether or not they meet the join condition.
- Inner: Keeps only rows that match in both the left and right tables.

4. After merging, check the results by previewing the table.
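The three Join Types can be compared with a small pure-Python sketch that joins an event log to a meta table on a shared key. The table contents and the `user_id` key are hypothetical. The sketch assumes the join key is unique in the right table, fills unmatched columns with `None`, and only illustrates join semantics; it is not how the Merged Table feature is implemented.

```python
def join(left, right, key, how="inner"):
    """Join two lists of dicts on `key`; `how` is "inner", "left", or "full".

    Sketch only: assumes `key` is unique in `right`, fills unmatched columns
    with None, and lets right-hand values win if non-key column names clash.
    """
    if how not in ("inner", "left", "full"):
        raise ValueError(f"unsupported join type: {how}")
    right_index = {row[key]: row for row in right}
    left_cols = {col for row in left for col in row if col != key}
    right_cols = {col for row in right for col in row if col != key}
    result, matched = [], set()
    for row in left:
        match = right_index.get(row[key])
        if match is not None:
            matched.add(row[key])
            result.append({**row, **match})
        elif how in ("left", "full"):
            # Left Outer / Full Outer keep unmatched left rows.
            result.append({**row, **dict.fromkeys(right_cols)})
    if how == "full":
        # Full Outer also keeps right rows that matched nothing on the left.
        for k, row in right_index.items():
            if k not in matched:
                result.append({**dict.fromkeys(left_cols), **row})
    return result

events = [{"user_id": "u1", "event": "purchase"}, {"user_id": "u3", "event": "login"}]
users = [{"user_id": "u1", "vip": True}, {"user_id": "u2", "vip": False}]
for how in ("inner", "left", "full"):
    print(how, join(events, users, "user_id", how))
```

With this data, Inner returns only `u1`, Left Outer adds `u3` with `vip` set to `None`, and Full Outer also adds `u2` with `event` set to `None`.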

How to use your data

Data uploaded through Data House can be viewed as a separate report in Analytics.